Explainer: What is a File System?

Have you ever needed to format a new hard drive or USB drive, and were given the option of selecting from acronyms like FAT, FAT32, or NTFS? Or did you once try plugging in an external device, only for your operating system to have trouble understanding it? Here's another one... do you sometimes simply get frustrated by how long it takes your OS to find a particular file while searching?

If you have experienced any of the above, or simply pointed-and-clicked your way to find a file or application on your computer, then you've had first-hand experience of what a file system is.

Many people might not employ an explicit methodology for organizing their personal files on a PC (explainer_file_system_final_actualfinal_FinalDraft.docx). However, the abstract concept of organizing files and directories for any device with persistent memory needs to be very systematic when reading, writing, copying, deleting, and interfacing with data. This job of the operating system is typically assigned to the file system.

There are many different ways to organize files and directories. If you can simply imagine a physical file cabinet with papers and folders, you would need to consider many things when coming up with a system for retrieving your documents. Would you organize the folders in alphabetical, or reverse alphabetical order? Would you prioritize commonly accessed files in the front or back of the file cabinet? How would you deal with duplicates, whether on purpose (for redundancy) or accidental (naming two files exactly the same way)? These are just a few analogous questions that need answering when developing a file system.

In this explainer, we'll take a deep dive into how modern day computers tackle these problems. We'll go over the various roles of a file system in the larger context of an operating system and physical drives, in addition to how file systems are designed and implemented.

Persistent Data: Files and Directories

Modern operating systems are increasingly complex, and need to manage various hardware resources, schedule processes, and virtualize memory, among many other tasks. When it comes to data, many hardware advances such as caches and RAM have been designed to speed up access time, and ensure that frequently used data is "nearby" the processor. However, when you power down your computer, only the information stored on persistent devices, such as hard disk drives (HDDs) or solid-state storage devices (SSDs), will remain beyond the power off cycle. Thus, the OS must take extra care of these devices and the data onboard, since this is where users will keep data they actually care about.

Two of the most important abstractions developed over time for storage are the file and the directory. A file is a linear array of bytes, each of which you can read or write. While in user space we can think of clever names for our files, under the hood there are typically numerical identifiers to keep track of files. Historically, this low-level identifier is often referred to as the file's inode number (more on that later). Interestingly, the OS itself does not know much about the internal structure of a file (i.e., is it a picture, video, or text file); in fact, all it needs to know is how to write the bytes into the file for persistent storage, and make sure it can retrieve them later when called upon.
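To make the "linear array of bytes" idea concrete, here is a minimal Python sketch. It assumes a scratch file named example.bin (a name made up for this illustration) and uses the standard os.stat call to read back the file's size and its inode number on systems that expose one.

```python
import os
import tempfile

# Create a scratch file; the name "example.bin" is arbitrary (an assumption).
workdir = tempfile.mkdtemp()
path = os.path.join(workdir, "example.bin")

with open(path, "wb") as f:
    f.write(b"hello world")          # bytes at offsets 0..10

with open(path, "r+b") as f:
    f.seek(6)                        # jump straight to byte offset 6
    f.write(b"W")                    # overwrite a single byte in place
    f.seek(0)
    contents = f.read()

info = os.stat(path)
inode_number = info.st_ino           # low-level identifier behind the name
size_in_bytes = info.st_size
print(contents, size_in_bytes, inode_number)
```

The file system never needed to know what the bytes mean; it only stored them and handed them back at the requested offsets.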

The second main abstraction is the directory. A directory is actually just a file under the hood, but contains a very specific set of data: a list of user-readable names to low-level name mappings. Practically speaking, that means it contains a list of other directories or files, which altogether can form a directory tree, under which all files and directories are stored.

Such an organization is quite expressive and scalable. All you need is a pointer to the root of the directory tree (physically speaking, that would be to the first inode in the system), and from there you can access any other files on that disk partition. This system also allows you to create files with the same name, so long as they do not have the same path (i.e., they fall under different locations in the file-system tree).

Additionally, you can technically name a file anything you want! While it is conventional to denote the type of file with a period separation (such as .jpg in picture.jpg), that is purely optional. Some operating systems such as Windows heavily suggest using these conventions in order to open files in the respective application of choice, but the content of the file itself isn't dependent on the file extension. The extension is only a hint for the OS on how to interpret the bytes contained inside a file.
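As a small illustration of the extension being only a hint, the sketch below writes the well-known 8-byte PNG signature into a file deliberately named notes.txt (a hypothetical name) and then identifies it by content. The helper looks_like_png is invented for this example and is not part of any library.

```python
import os
import tempfile

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"   # the first 8 bytes of every PNG file

def looks_like_png(path):
    """Check the leading bytes instead of trusting the file name."""
    with open(path, "rb") as f:
        return f.read(len(PNG_SIGNATURE)) == PNG_SIGNATURE

workdir = tempfile.mkdtemp()

# A file with a misleading extension (hypothetical name).
misnamed = os.path.join(workdir, "notes.txt")
with open(misnamed, "wb") as f:
    f.write(PNG_SIGNATURE + b"rest of the image data would follow")

# A file with a .png extension that holds plain text.
fake_png = os.path.join(workdir, "picture.png")
with open(fake_png, "wb") as f:
    f.write(b"just some text")

print(looks_like_png(misnamed), looks_like_png(fake_png))
```

The file system stores both files the same way; only the application reading the bytes decides what they represent.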

Once you have files and directories, you need to be able to operate on them. In the context of a file system, that means being able to read the data, write data, manipulate files (delete, move, copy, etc.), and manage permissions for files (who can perform all the operations above?). How are modern file systems implemented to allow for all these operations to happen quickly and in a scalable fashion?

File System Organization

When thinking about a file system, there are typically two aspects that need to be addressed. The first is the data structures of the file system. In other words, what types of on-disk structures are used by the file system to organize its data and metadata? The second aspect is its access methods: how can a process open, read, or write onto its structures?

Let's begin by describing the overall on-disk organization of a rudimentary file system.

The first thing you need to do is to split up your disk into blocks. A commonly used block size is 4 KB. Let's assume you have a very small disk with 256 KB of storage space. The first step is to divide this space evenly using your block size, and identify each block with a number (in our case, labeling the blocks from 0 to 63):

Now, let's break up these blocks into various regions. Let's set aside most of the blocks for user data, and call this the data region. In this example, let's fix blocks 8-63 as our data region:

If you noticed, we put the data region in the latter part of the disk, leaving the first few blocks for the file system to use for a different purpose. Specifically, we want to use them to track information about files, such as where a file might be in the data region, how large a file is, its owner and access rights, and other types of information. This information is a key piece of the file system, and is called metadata.

To store this metadata, we will use a special data structure called an inode. In the running example, let's set aside 5 blocks as inodes, and call this region of the disk the inode table:

Inodes are typically not that big, for example 256 bytes. Thus, a 4 KB block can hold 16 inodes, and our simple file system above contains 80 total inodes. This number is actually significant: it means that the maximum number of files in our file system is 80. With a larger disk, you can certainly increase the number of inodes, directly translating to more files in your file system.
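The arithmetic behind those numbers can be checked in a few lines of Python. The constant names are made up for this sketch; the values come from the running example.

```python
BLOCK_SIZE = 4 * 1024      # 4 KB blocks
INODE_SIZE = 256           # bytes per inode
INODE_TABLE_BLOCKS = 5     # blocks reserved for the inode table

inodes_per_block = BLOCK_SIZE // INODE_SIZE
total_inodes = inodes_per_block * INODE_TABLE_BLOCKS
print(inodes_per_block, total_inodes)   # 16 80
```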

There are a few things remaining to complete our file system. We also need a way to keep track of whether inodes or data blocks are free or allocated. This allocation structure can be implemented as two separate bitmaps, one for inodes and another for the data region.

A bitmap is a very simple data structure: each bit corresponds to whether an object/block is free (0) or in-use (1). We can assign the inode bitmap and data region bitmap to their own block. Although this is overkill (a 4 KB block holds 32K bits and can therefore track up to 32K objects, but we only have 80 inodes and 56 data blocks), this is a convenient and simple way to organize our file system.
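A bitmap of this kind could be sketched as follows. The Bitmap class and its first-free allocation policy are assumptions made for illustration; real file systems use more elaborate allocation strategies.

```python
class Bitmap:
    """One bit per object: 0 means free, 1 means in use."""

    def __init__(self, num_objects):
        self.num_objects = num_objects
        self.bits = bytearray((num_objects + 7) // 8)

    def is_used(self, index):
        return bool(self.bits[index // 8] & (1 << (index % 8)))

    def set_used(self, index):
        self.bits[index // 8] |= 1 << (index % 8)

    def set_free(self, index):
        self.bits[index // 8] &= ~(1 << (index % 8)) & 0xFF

    def allocate(self):
        """Return the first free index and mark it used, or None if full."""
        for index in range(self.num_objects):
            if not self.is_used(index):
                self.set_used(index)
                return index
        return None

inode_bitmap = Bitmap(80)           # 80 inodes in the running example
first = inode_bitmap.allocate()
second = inode_bitmap.allocate()
inode_bitmap.set_free(first)
third = inode_bitmap.allocate()     # reuses the slot that was just freed

bits_per_block = 4 * 1024 * 8       # objects one 4 KB bitmap block can track
print(first, second, third, bits_per_block)
```

The 80-inode bitmap needs only 10 bytes, which is why dedicating a whole 4 KB block to it is generous.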

Finally, for the last remaining block (which, coincidentally, is the first block in our disk), we need to have a superblock. This superblock is sort of a metadata for the metadata: in the block, we can store information about the file system, such as how many inodes there are (80) and where the inode table begins (block 3) and so forth. We can also put some identifier for the file system in the superblock to understand how to interpret nuances and details for different file system types (e.g., we can note that this file system is a Unix-based, ext4 filesystem, or perhaps an NTFS). When the operating system reads the superblock, it can then have a blueprint for how to interpret and access different data on the disk.

Adding a superblock (S), an inode bitmap (i), and a data region bitmap (d) to our simple system.
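The complete layout of the toy disk can be written down as a short Python sketch. The superblock in block 0, the inode table in blocks 3-7, and the data region in blocks 8-63 follow the text; placing the inode bitmap in block 1 and the data bitmap in block 2 is an assumption, as are the Superblock field names and the "toyfs" identifier.

```python
from dataclasses import dataclass

BLOCK_SIZE = 4 * 1024
DISK_SIZE = 256 * 1024
NUM_BLOCKS = DISK_SIZE // BLOCK_SIZE        # 64 blocks, numbered 0..63

# Region layout as (first_block, last_block); bitmap order is assumed.
LAYOUT = {
    "superblock":   (0, 0),
    "inode_bitmap": (1, 1),
    "data_bitmap":  (2, 2),
    "inode_table":  (3, 7),
    "data_region":  (8, 63),
}

@dataclass
class Superblock:
    """Hypothetical fields; real superblocks hold many more."""
    fs_type: str
    block_size: int
    num_inodes: int
    inode_table_start: int    # block number
    data_region_start: int    # block number

superblock = Superblock("toyfs", BLOCK_SIZE, 80, 3, 8)

def region_of(block):
    """Name the region that a block number falls into."""
    for name, (first, last) in LAYOUT.items():
        if first <= block <= last:
            return name
    raise ValueError(f"block {block} is outside the disk")

LETTERS = {"superblock": "S", "inode_bitmap": "i", "data_bitmap": "d",
           "inode_table": "I", "data_region": "D"}
disk_map = "".join(LETTERS[region_of(b)] for b in range(NUM_BLOCKS))
print(disk_map)
```

Printing disk_map gives one letter per block, a text stand-in for the block diagram: one S, one i, one d, five I blocks, and 56 D blocks.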

The Inode

So far, we've mentioned the inode data structure in a file system, but have not yet explained what this critical component is. An inode is short for an index node, and is a historical name given from UNIX and earlier file systems. Practically all modern day systems use the concept of an inode, but may call them different things (such as dnodes, fnodes, etc).

Fundamentally though, the inode is an indexable data structure, meaning the information stored in it is laid out in a very specific way, such that you can jump to a particular location (the index) and know how to interpret the next set of bits.

A particular inode is referred to by a number (the i-number), and this is the low-level name of the file. Given an i-number, you can look up its information by quickly jumping to its location. For example, from the superblock, we know that the inode region starts from the 12 KB address.

Since a disk is not byte-addressable, we have to know which block to access in order to find our inode. With some fairly simple math, we can compute the block ID based on the i-number of interest, the size of each inode, and the size of a block. Subsequently, we can find the start of the inode within the block, and read the desired data.
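That "fairly simple math" might look like the sketch below, using the running example's numbers (4 KB blocks, 256-byte inodes, inode table starting at the 12 KB address). The function name locate_inode is made up for this illustration, and i-numbers are assumed to start at 0.

```python
BLOCK_SIZE = 4 * 1024
INODE_SIZE = 256
INODE_TABLE_START = 3 * BLOCK_SIZE     # byte address 12 KB

def locate_inode(inumber):
    """Return (block number, byte offset within that block) for an inode."""
    byte_address = INODE_TABLE_START + inumber * INODE_SIZE
    block = byte_address // BLOCK_SIZE
    offset = byte_address % BLOCK_SIZE
    return block, offset

print(locate_inode(0))    # (3, 0)
print(locate_inode(32))   # (5, 0)
print(locate_inode(79))   # (7, 3840)
```

The last inode (i-number 79) sits in the final 256 bytes of block 7, right before the data region begins at block 8.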

The inode contains virtually all of the information you need about a file. For example, is it a regular file or a directory? What is its size? How many blocks are allocated to it? What permissions are allowed to access the file (i.e., who is the owner, and who can read or write)? When was the file created or last accessed? And many other flags or metadata about the file.

One of the most important pieces of information kept in the inode is a pointer (or list of pointers) on where the data resides in the data region. These are known as direct pointers. The concept is nice, but for very large files, you might run out of pointers in the small inode data structure. Thus, many modern systems have special indirect pointers: instead of directly going to the data of the file in the data region, you can use an indirect block in the data region to expand the number of direct pointers for your file. In this way, files can grow much larger than the limited set of direct pointers available in the inode data structure.

Unsurprisingly, you can use this approach to support even larger data types, by having double or triple indirect pointers. This type of file system is known as having a multi-level index, and allows a file system to support large files (think in the gigabytes range) or larger. Common file systems such as ext2 and ext3 use multi-level indexing systems. Newer file systems, such as ext4, have the concept of extents, which are slightly more complex pointer schemes.
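To see how much each level of indirection buys, here is a rough calculation. The figures of 12 direct pointers per inode and 4-byte block addresses are assumptions chosen for illustration and are not taken from the text; only the 4 KB block size comes from the running example.

```python
BLOCK_SIZE = 4 * 1024
POINTER_SIZE = 4          # assumed: 4-byte block addresses
DIRECT_POINTERS = 12      # assumed: direct pointers held in the inode

def max_file_size(indirection_levels):
    """Largest file in bytes with direct pointers plus one pointer per level."""
    pointers_per_block = BLOCK_SIZE // POINTER_SIZE   # 1024 with these values
    blocks = DIRECT_POINTERS
    for level in range(1, indirection_levels + 1):
        blocks += pointers_per_block ** level
    return blocks * BLOCK_SIZE

for levels in range(4):
    print(levels, max_file_size(levels))
```

Under these assumptions, direct pointers alone cover 48 KB, a single indirect block raises the limit to roughly 4 MB, a double indirect block to roughly 4 GB, and a triple indirect block to roughly 4 TB.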

While the inode data structure is very popular for its scalability, many studies have been performed to understand its efficacy and the extent to which multi-level indices are needed. One study has shown some interesting measurements on file systems, including:

- Most files are actually very small (2 KB is the most common size)

- The average file size is growing (almost 200 KB is the average)

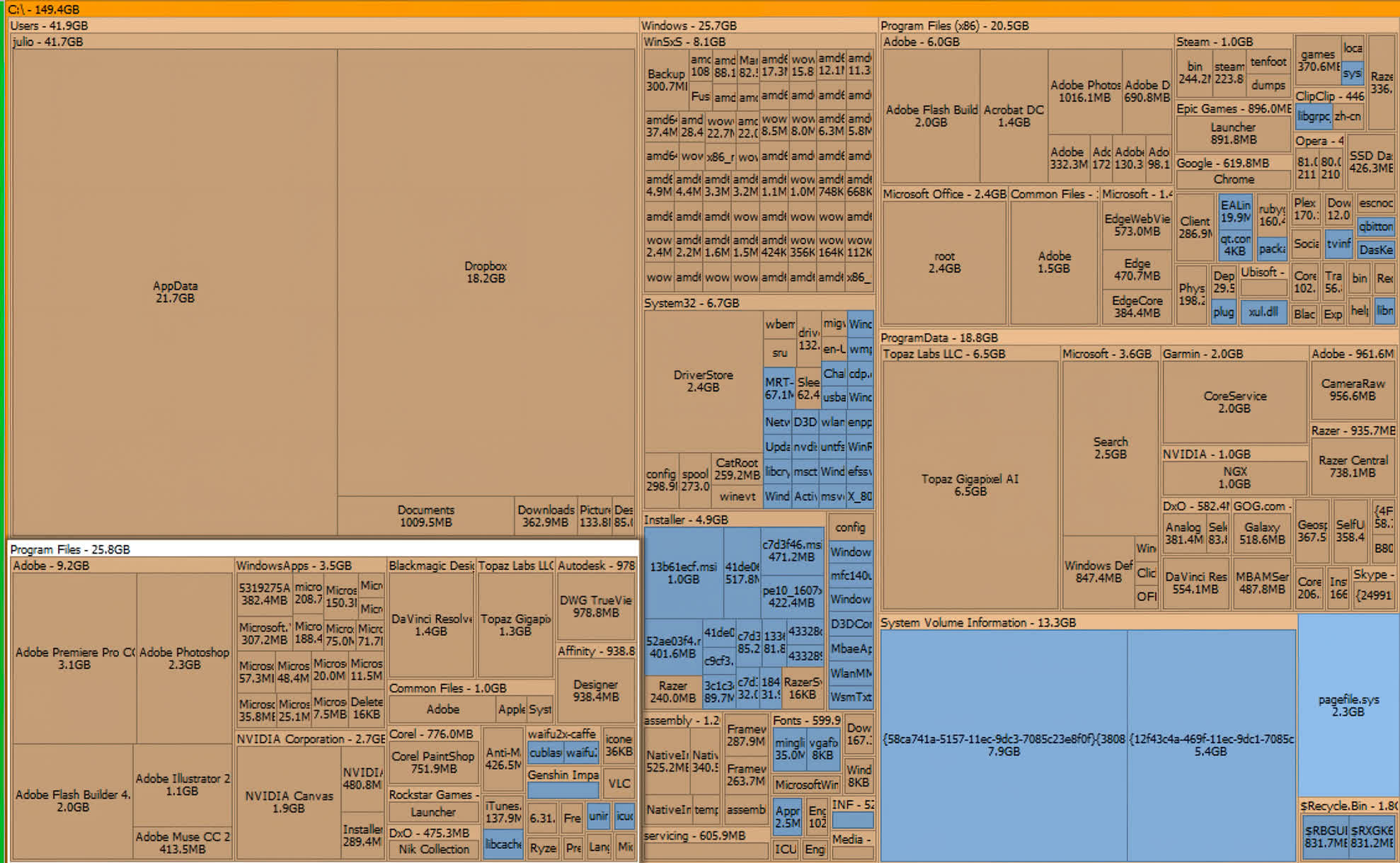

- Most bytes are stored in large files (a few big files use most of the space)

- File systems contain lots of files (almost 100k on average)

- File systems are roughly half full (even as disks grow, file systems remain ~50% full)

- Directories are typically small (many have few entries, 20 or fewer)

This all points to the versatility and the scalability of the inode data structure, and how it supports most modern systems perfectly fine. Many optimizations have been implemented for speed and efficiency, but the core structure has changed little over recent times.

Directories

Under the hood, directories are simply a very specific type of file: they contain a list of entries using an (entry name, i-number) pairing system. The entry name is typically a human-readable name, and the corresponding i-number captures its underlying file-system "name."

Each directory typically also contains two additional entries beyond the list of user-specified names: one entry is the "current directory" pointer, and the other is the parent directory pointer. When using a command line terminal, you can "change directory" by typing

- cd [directory name]

or move up a directory by using

- cd ..

where ".." is the abstract name of the parent directory pointer.

Since directories are typically just "special files," managing the contents of a directory is usually as simple as adding and deleting pairings inside the file. A directory typically has its own inode in a linear file system tree (as described above), but new data structures such as B-trees have been proposed and used in some modern file systems such as XFS.
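A path lookup over such (entry name, i-number) pairs can be sketched with a toy in-memory inode table. Everything here is hypothetical: the i-numbers, the root i-number of 2, and the resolve function are chosen only to show the walk through "." and ".." entries.

```python
ROOT_INUMBER = 2   # assumed; the value is arbitrary in this sketch

# Toy inode table: i-number -> inode. Directories hold name -> i-number entries.
inodes = {
    2: {"type": "dir", "entries": {".": 2, "..": 2, "home": 3}},
    3: {"type": "dir", "entries": {".": 3, "..": 2, "notes.txt": 4}},
    4: {"type": "file", "data": b"hello"},
}

def resolve(path, cwd=ROOT_INUMBER):
    """Walk a path one component at a time and return the final i-number."""
    current = ROOT_INUMBER if path.startswith("/") else cwd
    for name in path.split("/"):
        if name == "":
            continue                      # leading or doubled slashes
        inode = inodes[current]
        if inode["type"] != "dir":
            raise NotADirectoryError(name)
        if name not in inode["entries"]:
            raise FileNotFoundError(name)
        current = inode["entries"][name]
    return current

print(resolve("/home/notes.txt"))         # 4
print(resolve("..", cwd=3))               # 2
```

Each path component costs one directory read, which is why deep paths translate into several I/O operations on a real disk.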

Access Methods and Optimizations

A file system would be useless if you could not read and write data to it. For this step, you need a well defined methodology to enable the operating system to access and interpret the bytes in the data region.

The basic operations on a file include opening a file, reading a file, or writing to a file. These procedures require a huge number of input/output operations (I/O), and are typically scattered over the disk. For example, traversing a file system tree from the root node to the file of interest requires jumping from an inode to a directory file (potentially multi-indexed) to the file location. If the file does not exist, then certain additional operations such as creating an inode entry and assigning permissions are required.

Many technologies, both in hardware and software, have been developed to improve access times and interactions with storage. A very common hardware optimization is the use of SSDs, which have much improved access times due to their solid state properties. Hard drives, on the other hand, typically have mechanical parts (a moving spindle) which means there are physical limitations on how fast you can "jump" from one part of the disk to another.

While SSDs provide fast disk accesses, that typically isn't enough to accelerate reading and writing data. The operating system will commonly use faster, volatile memory structures such as RAM and caches to make the data "closer" to the processor, and accelerate operations. In fact, the operating system itself is typically stored on a file system, and one major optimization is to keep common read-only OS files perpetually in RAM in order to ensure the operating system runs quickly and efficiently.

Without going into the nitty-gritty of file operations, there are some interesting optimizations that are employed for data management. For instance, when deleting a file, one common optimization is to simply delete the inode pointing to the data, and effectively mark the disk regions as "free memory." The data on disk isn't physically wiped out in this case, but access to it is removed. In order to fully "delete" a file, certain formatting operations can be done to write all zeroes (0) over the disk regions being deleted.
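The delete optimization can be mimicked with a toy model. The dictionaries below stand in for the on-disk structures, and the file name, i-number, and block numbers are all invented for this sketch.

```python
# Toy state: one file "a.txt" whose inode 5 points at data blocks 8 and 9.
data_blocks = {8: b"secret ", 9: b"contents"}
data_bitmap = {8: 1, 9: 1}                  # 1 = in use, 0 = free
inode_bitmap = {5: 1}
inodes = {5: {"size": 15, "blocks": [8, 9]}}
directory = {"a.txt": 5}

def delete(name):
    """Remove the name and free the inode and blocks without erasing data."""
    inumber = directory.pop(name)
    for block in inodes[inumber]["blocks"]:
        data_bitmap[block] = 0              # block may now be reused
    inode_bitmap[inumber] = 0
    del inodes[inumber]

delete("a.txt")
leftover = data_blocks[8] + data_blocks[9]  # old bytes remain until overwritten
print(leftover)
```

After the call, nothing in the directory or inode table leads to the data, yet the bytes are still sitting in blocks 8 and 9 until something else is written there.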

Another common optimization is moving data. As users, we might want to move a file from one directory to another based on our personal organization preferences. The file system, however, just needs to change minimal data in a few directory files, rather than actually shifting bits from one place to another. By using the concept of inodes and pointers, a file system can perform a "move" operation (within the same disk) very quickly.
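One way to observe this from user space is to compare a file's inode number before and after a move. The sketch below uses Python's standard os.rename and os.stat; the directory and file names are made up, and the behavior shown assumes both directories live on the same file system (as they do inside one temporary directory on typical POSIX systems).

```python
import os
import tempfile

workdir = tempfile.mkdtemp()
src_dir = os.path.join(workdir, "inbox")      # hypothetical directory names
dst_dir = os.path.join(workdir, "archive")
os.mkdir(src_dir)
os.mkdir(dst_dir)

src = os.path.join(src_dir, "report.txt")
dst = os.path.join(dst_dir, "report.txt")
with open(src, "wb") as f:
    f.write(b"x" * 1_000_000)                 # about 1 MB of data

inode_before = os.stat(src).st_ino
os.rename(src, dst)                           # only directory entries change
inode_after = os.stat(dst).st_ino

print(inode_before == inode_after)
```

The file keeps the same inode, which suggests that none of its data blocks had to be copied; moving across different disks, by contrast, requires copying the bytes.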

When it comes to "installing" applications or games, this simply means copying over files to a specific location and setting global variables and flags for making them executable. In Windows, an install typically asks for a directory, and then downloads the data for running the application and places it into that directory. There is nothing particularly special about an install, other than the automated mechanism for writing many files and directories from an external source (online or physical media) into the disk of choice.

Common File Systems

Modern file systems have many detailed optimizations that work hand-in-hand with the operating system to improve performance and provide various features (such as security or large file support). Some of the most popular file systems today include FAT32 (for flash drives and, previously, Windows), NTFS (for Windows), and ext4 (for Linux).

At a high level, all these file systems have similar on-disk structures, but differ in the details and the features that they support. For example, the FAT (File Allocation Table) format, of which FAT32 is a later variant, was initially designed in 1977, and was used in the early days of personal computing. It uses a concept of a linked list for file and directory accesses, which while simple and efficient, can be slow for larger disks. Today, FAT32 is a commonly used format for flash drives.

The NTFS (New Technology File System) developed by Microsoft in 1993 addressed many of the limitations that came with FAT's humble beginnings. It improves performance by storing various additional metadata about files and supports various structures for encryption, compression, sparse files, and system journaling. NTFS is still used today in Windows 10 and 11. Similarly, macOS and iOS devices use a proprietary file system created by Apple. HFS+ (also known as Mac OS Extended) used to be the standard before Apple introduced the Apple File System (APFS) in 2017, which is better optimized for faster storage media and supports advanced capabilities like encryption and increased data integrity.

The fourth extended filesystem, or ext4, is the fourth iteration of the ext file system, developed in 2008, and the default file system for many Linux distributions including Debian and Ubuntu. It can support large file sizes (up to 16 tebibytes), and uses the concept of extents to further enhance inodes and metadata for files. It uses a delayed allocation system to reduce writes to disk, has many improvements such as filesystem checksums for data integrity, and can also be accessed from Windows and macOS, typically with additional software.

Each file system provides its own set of features and optimizations, and may have many implementation differences. However, fundamentally, they all carry out the same functionality of supporting files and interacting with data on disk. Certain file systems are optimized to work better with different operating systems, which is why the file system and operating system are very closely intertwined.

Next-Gen File Systems

One of the most important features of a file system is its resilience to errors. Hardware errors can occur for a variety of reasons, including wear-out, random voltage spikes or droops (from processor overclocking or other optimizations), random alpha particle strikes (also called soft errors), and many other causes. In fact, hardware errors are such a costly problem to identify and debug, that both Google and Facebook have published papers about how important resilience is at scale, particularly in data centers.

To that end, most next-gen file systems are focusing on faster resiliency and fast(er) security. These features come at a cost, typically incurring a performance penalty in order to incorporate more redundancy or security features into the file system.

Hardware vendors typically include various protection mechanisms for their products such as ECC protection for RAM, RAID options for disk redundancy, or full-blown processor redundancy such as Tesla's recent Full Self-Driving (FSD) chip. However, that additional layer of protection in software via the file system is just as important.

Microsoft has been working on this problem for many years now in its Resilient File System (ReFS) implementation. ReFS was originally released for Windows Server 2012, and is meant to succeed NTFS. ReFS uses B+ trees for all its on-disk structures (including metadata and file data), and has a resiliency-first approach for implementation. This includes checksums for all metadata stored independently, and an allocation-on-write policy. Effectively, this reduces the burden on administrators from needing to run periodic error-checking tools such as CHKDSK when using ReFS.
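The general idea of keeping a checksum apart from the metadata it protects can be sketched in a few lines. This uses CRC32 from Python's standard zlib module purely as a stand-in; it is not a claim about which checksum algorithm ReFS uses, and the store and verify helpers are invented for the example.

```python
import zlib

def store(block):
    """Return the block together with a CRC32 computed when it is written."""
    return bytes(block), zlib.crc32(block)

def verify(block, stored_checksum):
    """Recompute the checksum on read and compare with the stored one."""
    return zlib.crc32(block) == stored_checksum

# Hypothetical metadata record; the checksum is kept separately from it.
metadata, checksum = store(b"inode 5: size=15 blocks=[8, 9]")

corrupted = bytearray(metadata)
corrupted[0] ^= 0x01                       # simulate a single flipped bit

print(verify(metadata, checksum))          # True
print(verify(bytes(corrupted), checksum))  # False
```

Because the checksum lives apart from the data, a corrupted block cannot silently "agree" with itself, and the file system can detect the damage on the next read.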

In the open-source world, Btrfs (pronounced "better FS" or "Butter FS") is gaining traction with similar features to ReFS. Again, the main focus is on error-tolerance, self-healing properties, and easy administration. It also provides better scalability than ext4, allowing roughly 16x more data support.

Summary

While there are many different file systems in use today, the main objective and high-level concepts have changed little over time. To build a file system, you need some basic information about each file (metadata) and a scalable storage structure to write and read from various files.

The underlying implementation of inodes and files together forms a very extensible system, which has been fine-tuned and tweaked to provide us with modern file systems. While we may not think about file systems and their features in our day-to-day lives, it is a true testament to their robustness and scalable design that they have enabled us to enjoy and access our digital data on computers, phones, consoles, and various other systems.

Masthead image: Jelle Dekkers

Source: https://www.techspot.com/article/2377-file-system-explainer/

Posted by: loudermilkpanytherry.blogspot.com
